AI in GTM & RevOps (2/4): Requirements and Regulations

Explore the intersection of AI deployment and EU regulations in this guide. Learn to leverage AI in GTM and RevOps while staying compliant.

Are you hyped about AI?

Did the first blog in the AI in GTM and RevOps series get you hooked?

Do you want to deploy it in your business?

Yes!

Well, I don’t want to be the party pooper, but we need to talk about requirements and regulations.

Nobody wants to talk about requirements and regulations.

Requirements for AI deployment

Deploying AI in a business requires preparation. Without the proper set-up, it is like throwing money out of the window.

The requirements are not necessarily new. They also relate to other aspects of your business. If you have a RevOps team, you have probably heard of these topics in a different context.

Data and IT

ChatGPT, Bard, Claude and co. all require a huge amount of clean data to provide good results. You sometimes see these models give an obviously wrong answer, often called a hallucination. That can happen when the model has conflicting data points.

It is less of an issue if you ask ChatGPT who the second president of the United States was and it gives you the wrong answer (it was John Adams). But it is a problem when you base your business decisions on it.

That is why the data preparation part is important for businesses.

80% of AI deployment time will be spent on data preparation.

CompTIA, the Computing Technology Industry Association from the US

From a RevOps perspective this makes sense. We see the same with normal analytics and reports. Bad data makes reports unusable or skewed.

Clean data

One key requirement for AI to work is clean data. This includes:

Data free from duplicates

Consistent data format (e.g. date formats always the same)

Data free from errors
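To make the three points above concrete, here is a minimal Python sketch of a cleaning pass over a contact export. The field names (`email`, `signup_date`), the sample records and the list of date formats are all assumptions for illustration, not taken from any specific CRM.

```python
from datetime import datetime

# Hypothetical CRM export: field names and values are made up for illustration.
RAW_CONTACTS = [
    {"email": "Ana@Example.com ", "signup_date": "2024-01-15"},
    {"email": "ana@example.com", "signup_date": "15/01/2024"},
    {"email": "ben@example.com", "signup_date": "Jan 20, 2024"},
    {"email": "not-an-email", "signup_date": "2024-02-01"},
]

# Date formats we assume appear in the export; extend as needed.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def normalize_date(value):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_contacts(rows):
    """Normalize formats, set aside broken rows, and drop duplicates."""
    seen = set()
    cleaned, rejected = [], []
    for row in rows:
        email = row["email"].strip().lower()
        date = normalize_date(row["signup_date"])
        # Very rough validity check, not a full email validator.
        if "@" not in email or date is None:
            rejected.append(row)
            continue
        if email in seen:  # duplicate of a record we already kept
            continue
        seen.add(email)
        cleaned.append({"email": email, "signup_date": date})
    return cleaned, rejected


cleaned, rejected = clean_contacts(RAW_CONTACTS)
print(cleaned)
print(rejected)
```

In this sketch, rejected rows are kept in a separate list rather than silently dropped, so that someone can review and fix them at the source.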

Structured data

Another important part is to structure your data in logical relationships. This is mostly done by the CRM itself, but it becomes important when you use a lot of custom data. A bad example of data structure in a CRM is storing company-specific data on an opportunity. That duplicates data that is already on the account. Every new opportunity for the same account then creates even more duplicates. The problem gets worse when custom objects are created with poor relationships.

It becomes important to have a good data structure that is clear to a human and to an AI. In our blog, CRM database design, we covered the topic in more detail.
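The account and opportunity example can be sketched in a few lines of Python. The class names, fields and sample values below are hypothetical and only illustrate the idea: company data lives once on the account, and each opportunity points to the account instead of copying its fields.

```python
from dataclasses import dataclass


@dataclass
class Account:
    account_id: str
    name: str
    industry: str  # company-specific data is stored here, once


@dataclass
class Opportunity:
    opportunity_id: str
    account_id: str  # reference to the account instead of copied fields
    amount: float


# Hypothetical sample data.
accounts = {"A1": Account("A1", "Acme GmbH", "Manufacturing")}
opportunities = [
    Opportunity("O1", "A1", 12000.0),
    Opportunity("O2", "A1", 8000.0),
]


def opportunity_report(opps, accts):
    """Look up account data at read time instead of duplicating it."""
    return [
        {
            "opportunity": o.opportunity_id,
            "amount": o.amount,
            "industry": accts[o.account_id].industry,
        }
        for o in opps
    ]


# One update on the account is reflected for every related opportunity.
accounts["A1"].industry = "Industrial Automation"
report = opportunity_report(opportunities, accounts)
print(report)
```

Had the industry been stored on each opportunity, the same change would need one update per opportunity, and any missed record would leave conflicting data points.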

Integration

Many business systems are siloed. That is a problem for businesses that want to be data driven - RevOps is all about removing these silos.

For AI, this becomes an even bigger problem. An AI requires access to a wide set of data to make sense of it. If the different systems are siloed, the AI can’t access the data to run its models.

Building out proper integration is crucial for any AI to function. Depending on your current state, this alone can be a complex task.

Data lakehouse

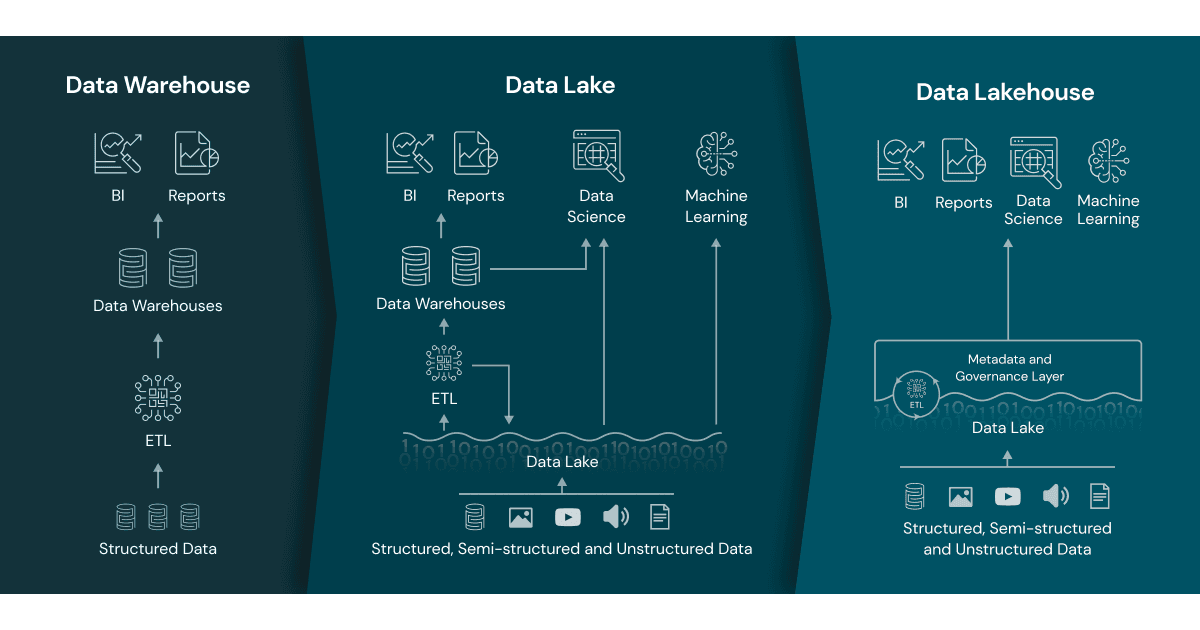

Investing in data lakehouses is crucial for businesses that want to deploy AI on a large scale across the business. To understand what a data lakehouse is, we first need to understand what a data warehouse and a data lake are.

A data warehouse is like a well-organized filing cabinet: excellent for straightforward, structured data analysis but less flexible. A data lake is like a vast storage unit: great for its capacity and variety but potentially cumbersome to navigate.

The data lakehouse combines the best of both, offering an open architecture that combines the flexibility and scale of data lakes with the management and data quality of warehouses.

Databricks have a great guide on this topic.

The real magic happens when AI enters the scene. Data lakehouses provide an ideal environment for AI and machine learning algorithms. That is due to their blend of structured organization and support for diverse data types.

They allow these tools to access a wide array of data, from structured tables to raw, unprocessed information. This accessibility enables AI to uncover deeper insights, recognize patterns, and make predictions with greater accuracy.

Building and managing a data lakehouse requires a lot of resources in terms of people and cash. In addition, one needs a lot of data.

That is why the saying “data is king” is more important than ever. You have probably also heard that large corporates have an unfair advantage in AI use. Well, they have the resources and they tend to have tons of data. They can run AI models on their proprietary data and gain that advantage.

Executive sponsors

Executive sponsors for AI projects are necessary due to the investment. As we have seen, AI projects require a proper Data and IT infrastructure to function optimally. This will require a lot of resources to deploy and optimise.

That is why executives need to agree on AI projects. They will require significant investment and change management.

Skill and expertise

Deployment of AI programs will require a new set of skills. Those include skills for preparing AI projects, deploying them, and maintaining and using them.

AI is the future for business, so it makes a lot of sense to develop that expertise internally.

Should those skills be lacking then it makes sense to consider working with consultants to assist with the first deployments and train your in-house team.

At Revenue Wizards we can help you with AI deployment services for GTM teams. You can contact us here.

Change Management

AI will change how your business operates. Roles and responsibilities will change. Humans will need new skills to work with AI and skills that were once useful will become obsolete.

This is a disruptive change for any business. It is important to be aware of all those changes and to prepare for them. The risk of a poorly executed AI project is that it does more harm than good.

AI safety and regulation

AI started back in 1951, but its mainstream commercial success only kicked off in the past year. Now that it is more in the public eye, regulators are more concerned with its safe use and regulation.

Public AI vs Private AI

A public AI like ChatGPT has the advantage that it is trained on a vast amount of data. A privacy risk is that the provider may use your private data and your business data to train its models. That was the concern at Samsung when employees entered confidential business data into ChatGPT: once submitted, such input can be used for training and could resurface in answers to other users, leaking confidential information. As a consequence, Samsung banned the use of public generative AI tools within its business.

Companies are now investing in private AI models. Those models work with company internal data.

Remember: to make this work, a company needs to fulfil the requirements for AI deployment and, especially, invest in a data lakehouse.

Private models reduce the risk of data breaches because they do not let internal data out. Enterprises and businesses with sensitive data are heavily investing in private AI for those reasons.

AI hallucination

When an AI hallucinates, it generates content that makes no sense or is untrue. This is a huge risk for businesses, as decisions cannot be based on false information.

To reduce hallucinations, a business needs clean data (see the requirements above) and a large amount of it. With large amounts of data comes the problem of exposing more data to the AI, which is not always simple, for example when a company has very sensitive data like financial or health data.

AI Regulations

AI is such a big topic that it impacts society as a whole. Therefore it is no surprise that various governments are working on AI regulations.

Those regulations will impact how businesses use AI. The table below shows some of the most significant AI regulations.

Table: AI regulations around the world

| Country | Regulation | Status |
| --- | --- | --- |
| US | Algorithmic Accountability Act 2023 (H.R. 5628) | Proposed (Sept. 21, 2023) |
| US | AI Disclosure Act of 2023 (H.R. 3831) | Proposed (June 5, 2023) |
| US | Digital Services Oversight and Safety Act of 2022 (H.R. 6796) | Proposed (Feb. 18, 2022) |
| Canada | Artificial Intelligence and Data Act (AIDA) | Proposed (June 16, 2022) |
| EU | EU Artificial Intelligence Act | Confirmed (Feb. 2, 2024)* |
| China | Interim Administrative Measures for the Management of Generative AI Services | Enacted (July 13, 2023) |

* Expected to be enacted in April 2024.

The most advanced in terms of security is the EU Artificial Intelligence Act.

EU Artificial Intelligence Act

The European Union is working on a regulatory framework for the use of Artificial Intelligence within Europe. The framework would impact every company bringing AI products to the European market. The regulation has been in the proposal stage since 2021. On 2 February 2024, the EU deputy ministers unanimously reached a deal. It is now expected to be enacted around April 2024.

Why are they doing this?

AI in the EU market should be safe and respect existing EU law

Build a legal framework to boost AI innovation in the EU

Create a governance framework and enforcement methods

Who is impacted?

Companies building and serving AI in the EU

Foreign companies serving the EU market

Foreign companies whose value chain captures data in the EU (even if they do not offer the product in the EU)

How does the EU AI Act classify risk?

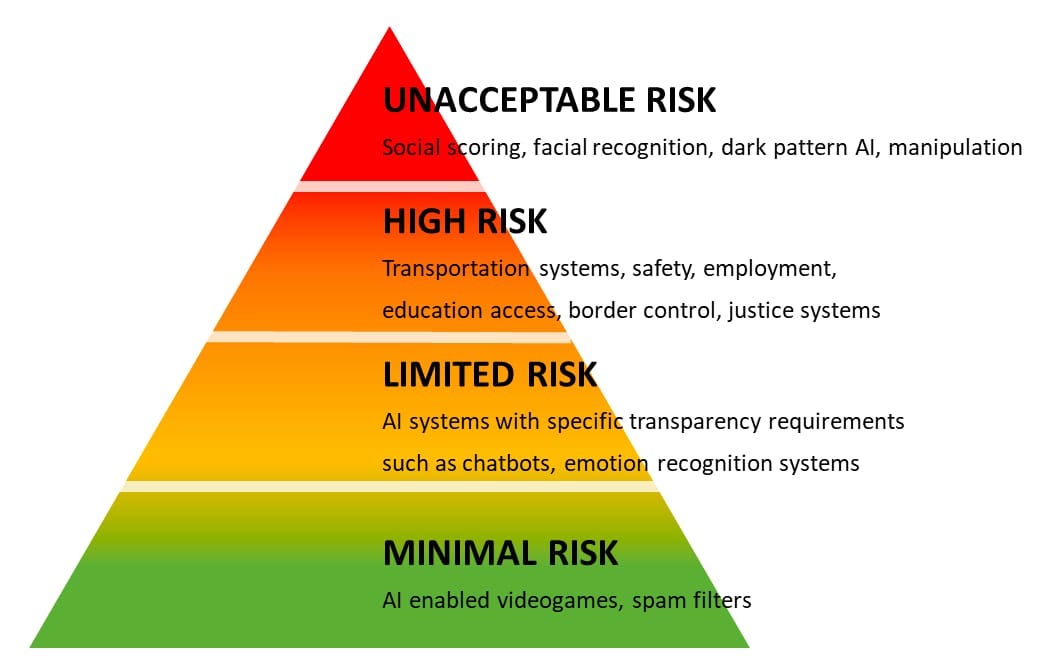

The AI Act would classify AI solutions into 4 risk categories:

Unacceptable Risk

High Risk

Limited Risk

Minimal Risk

Unacceptable-risk systems are those considered a threat to the safety, livelihoods and rights of people.

High-risk systems would include AI that supports the recruitment process or provides credit scoring. Those systems would require certification before being brought to market. In addition, every significant update would require recertification.

Limited risk requires transparency that one is interacting with an AI. For example, a chatbot needs a clear indication that the user is talking to a chatbot. AI software in the minimal risk category would be free to use. That might be an AI in a video game or a spam filter. Most of the AI currently on the market falls into this category.
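As a rough triage aid, the four categories can be written down as a small lookup in Python. This is a simplified sketch based only on the examples in this post: the use-case labels, the `classify` helper and the obligation summaries are our own assumptions, not wording from the AI Act, and anything not listed should go to a manual review.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified summaries of what each tier means, paraphrased from this post.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "threat to safety, livelihoods and rights of people",
    RiskTier.HIGH: "certification before market entry, recertification on significant updates",
    RiskTier.LIMITED: "make clear that the user is interacting with an AI",
    RiskTier.MINIMAL: "free to use",
}

# Hypothetical mapping of use cases to tiers, using the examples from the text.
USE_CASE_TIERS = {
    "recruitment": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "video game ai": RiskTier.MINIMAL,
}


def classify(use_case):
    """Return the assumed tier for a use case, or None if it needs manual review."""
    return USE_CASE_TIERS.get(use_case.strip().lower())


# Example: triage a hypothetical list of tools in a GTM stack.
for tool in ["Chatbot", "Credit scoring", "Lead enrichment"]:
    tier = classify(tool)
    note = OBLIGATIONS[tier] if tier else "not listed, review manually"
    print(f"{tool}: {tier.value if tier else 'unknown'} ({note})")
```

Returning `None` for unknown use cases is deliberate: a tool that is not on the list should not default to the minimal tier.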

What about Transparency & Copyright law?

There are dedicated rules for general-purpose AI systems (GPAIs). They have to ensure transparency along the value chain. These rules include drawing up technical documentation, complying with EU copyright law and providing detailed summaries of the content used for training.

What can you do to prepare for the AI act?

Assess where your current (and future) tools fall within this framework.

Understand where you stand in the AI value chain and what your compliance requirements are.

Create a governance framework and risk management control system.

We believe that the regulation is a step in the right direction but it can also be a disadvantage.

Uncontrolled use of AI could have massive consequences for society, ranging from abuse to unemployment. At the same time, strict regulation can slow down innovation. If businesses in the EU are bound by strict AI regulation while businesses in the USA are not, this will create a clear competitive disadvantage for companies in the EU.

Now that we have set the foundation, we can talk about how AI tools will change GTM and RevOps. That will be the topic of next week's blog.

Revenue Wizards is a hands-on Revenue Operations Consultancy for growth companies focusing on efficiencies. We provide GTM & RevOps support with the goal of Revenue growth, GTM cost reduction, and Profitability. You can learn more about Revenue Wizards here.

Zhenya Bankouski is Co-Founder and Revenue Operations Partner. You can follow him on LinkedIn 🔔 where he posts regularly with practical advice on building efficient RevOps teams and truly data-driven organizations.

Haris Odobasic is Co-Founder and Revenue Strategy Partner. He enjoys bringing strategy to life with RevOps.